Brief History of Cryptography

Ancient Times

The roots of cryptography are found in Roman and Egyptian civilizations. Cryptography (from Ancient Greek 'kryptos', "hidden, secret", and 'graphein', "to write") is the study of "mathematical" systems for solving two kinds of security problems: privacy and authentication [1]. Cryptography has been used since about 1900 BC as a form of communication.

"The earlier Roman method of cryptography, popularly known as the Caesar Shift Cipher, relies on shifting the letters of a message by an agreed number (three was a common choice), the recipient of this message would then shift the letters back by the same number and obtain the original message [2]. Since this technique used one alphabet it was easy to break."

"The first known polyalphabetic cipher is believed to be the Alberti cipher by Leo Battista Alberti from around 1467. This cipher used multiple alphabets to encrypt a message. Alberti switched alphabets many times during the encryption of a message. He indicated that the alphabet should be changed by including an uppercase letter or a number in the plaintext. In 1470, Alberti developed a cipher disc to make encrypting and decrypting easier [3]."

"Another variation of the Alberti cipher is the Vigenère cipher devised by Blaise de Vigenère. The Vigenère cipher was used during the American Civil War [3]."

Sources: [1] New Directions in Cryptography,

[2] Origins of Cryptography,

[3] S. Haunts, Applied Cryptography in .NET and Azure Key Vault,

Chapter 2: A Brief History of Cryptography

Wartime

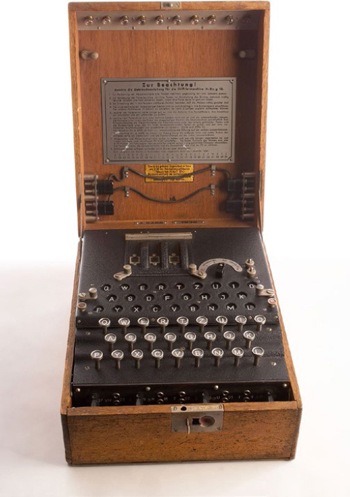

"All ciphers discussed above were manual and handled by a person with a pen and paper. The twentieth century saw cryptography become machine automated. The most famous example is the Enigma Electronic Rotor machine used during World War II.The Enigma machine resembled a typewriter. It featured a series of mechanical rotors, a keyboard, an illuminated series of letters and a plug board that allowed you to insert short cables. The Enigma machine came with a set of five rotors, three of which were used in the machine [1]."

There were many variations of the Enigma machine. Different versions of these machines were used by the United Kingdom, Germany and the USA at different times during World War II.

Sources: [1] S. Haunts, Applied Cryptography in .NET and Azure Key Vault,

Chapter 2: A Brief History of Cryptography,

[2] Central Intelligence Agency

Public and Private Key Cryptography

"In 1973, a German mathematician named Horst Feistel published an article titled "Cryptography and Computer Privacy" in Scientific American magazine. The article discussed a new form of cryptography, which became known as a Feistel network. The Feistel network became the basis for many of the modern cryptographic algorithms in use today. The most popular is the Data Encryption Standard (DES), which was published in 1997 by the National Bureau of Standards (NBS) in a joint venture with IBM, where Horst Feistel worked.The DES algorithm is called a symmetric encryption algorithm, which means that the same key used to encrypt data is the same key that is used to decrypt the data. This is similar, in principle, to polyalphabetic ciphers.

...Although the DES standard was set by NBS and IBM, the National Security Agency (NSA) insisted on modifications to the algorithm. The most prominent was changing the key size from 128 bits to 56 bits. NSA enforced modifications to give them a better chance at breaking the algorithm using special computer equipment. The reduction in key size eventually undid DES. Since the key length was reduced to 56 bits, and the Feistel network worked on 64-bit blocks, eight additional parity bits needed to be added to the input data because it is split to feed onto the blocks.

In 1997, a competition called the DES Challenge (DESCHALL) ended after 140 days of trying to break a DES algorithm through a massive distributed computing effort on the Internet. It was done by a brute-force attack, trying different key combinations out of a total key space of 72 quadrillion keys. The attack worked by having a single server controlling the keys. This server acted as the brain for the entire operation. Each computer that took part in the challenge had to ask for a range of keys from the key server and then report their result. The key server also logged the unique IP addresses of the machines involved and reported that over 78,000 individual machines took part in the challenge. Even though DES was cracked and compromised, a variation of DES called Triple DES, or 3DES, uses three iterations of DES to encrypt data.
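As an aside to the quoted account, the "72 quadrillion" figure is simply 2 to the power of 56, and the key-server idea can be sketched as a generator that hands out consecutive key ranges. This is only an illustration under stated assumptions: the name `key_ranges` and the chunk size are hypothetical, and the actual DESCHALL protocol is not described in the source.

```python
KEY_BITS = 56
KEY_SPACE = 2 ** KEY_BITS  # 72,057,594,037,927,936 possible DES keys


def key_ranges(total_keys: int, chunk_size: int):
    """Yield half-open (start, end) ranges that together cover the whole key space."""
    start = 0
    while start < total_keys:
        end = min(start + chunk_size, total_keys)
        yield (start, end)
        start = end


# Hypothetical chunk size: each client is given 2**30 keys to try at a time.
CHUNK = 2 ** 30
first_range = next(key_ranges(KEY_SPACE, CHUNK))  # (0, 1073741824)
chunks_needed = KEY_SPACE // CHUNK                # 2**26 ranges in total
```

In this sketch a client would test every key in its range and report back before asking for the next range, which matches the division of labour the passage describes.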

The algorithms discussed above are symmetrical algorithms, which means that they use the same key for encryption and decryption. The drawback of these algorithms is that it is very hard to share the keys between multiple parties.

In 1977, Ron Rivest, Adi Shamir, and Len Adleman created RSA, the most common viable alternative to symmetric algorithms, which uses a public key and a private key. The algorithm's name, RSA, comes from the initials of their surnames.

There is a limitation to RSA, though, in the amount of data you can encrypt in one go. You cannot encrypt data that is larger than the size of the key, which is typically 1024 bits, 2048 bits, or 4096 bits. You could break down your data into smaller chunks and encrypt it, but this is inefficient. One of the topics that we tackle later in the book is using RSA and AES together to build a hybrid encryption scheme."
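The size limit mentioned above can be seen in a toy RSA sketch. The primes below are deliberately tiny and there is no padding, so this is an illustration only and must not be used for real encryption; the names `encrypt` and `decrypt` are hypothetical, and `pow(e, -1, phi)` assumes Python 3.8 or later.

```python
# Toy parameters: real keys use primes that are hundreds of digits long.
p, q = 61, 53
n = p * q                    # modulus, 3233
phi = (p - 1) * (q - 1)      # 3120
e = 17                       # public exponent, coprime with phi
d = pow(e, -1, phi)          # private exponent (modular inverse of e), 2753


def encrypt(message: int) -> int:
    """Encrypt an integer with the public key (e, n); it must be smaller than n."""
    if not 0 <= message < n:
        raise ValueError("message must be in the range 0 <= message < n")
    return pow(message, e, n)


def decrypt(ciphertext: int) -> int:
    """Decrypt an integer with the private key (d, n)."""
    return pow(ciphertext, d, n)


ciphertext = encrypt(65)          # 2790
recovered = decrypt(ciphertext)   # 65
```

A message equal to or larger than `n` cannot be recovered, because all arithmetic is done modulo `n`. That is the reason data larger than the key has to be chunked or, more commonly, encrypted with a symmetric key that is itself protected by RSA.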

Source: S. Haunts, Applied Cryptography in .NET and Azure Key Vault,

Chapter 2: A Brief History of Cryptography

Pretty Good Privacy (PGP)

“The biggest threat to privacy that we face is that the power of computing is doubling every 18 months. The human population is not doubling every 18 months but the ability for computers to keep track of us is [1],” says Phil Zimmermann, reminding us of Moore’s Law. Zimmermann is the inventor of Pretty Good Privacy (PGP), software that uses RSA encryption to keep data communication over the internet private. PGP could run on anybody's personal computer, not just on the giant machines used in government and business.

"Pretty Good Privacy is an encryption system used for both sending encrypted emails and encrypting sensitive files. Since its invention back in 1991, PGP has become the de facto standard for email security.

The popularity of PGP is based on two factors. The first is that the system was originally available as freeware, and so spread rapidly among users who wanted an extra level of security for their email messages. The second is that since PGP uses both symmetric encryption and public-key encryption, it allows users who have never met to send encrypted messages to each other without exchanging private encryption keys [2]."

Sources: [1] A Short History of Cryptography,

[2] What is PGP Encryption and How Does It Work?

Why Is Cryptography Important?

"Cryptography allows people to have the same confidence they have in the real world in the electronic world. It enables people to do business electronically without worry of wrongdoing by others. Every day, millions of people interact electronically, whether it is through email, ecommerce (on sites like Amazon), or on ATMs or cellular phones. The significant increase of information transmitted over the Internet or on private networks has led to an increased reliance on cryptography.

Cryptography makes the Internet more secure and the safe transmission of electronic data possible. For a website to be protected, all the data transmitted between the computers must be encrypted. This allows people to do online banking and online shopping with their credit cards, without worrying that any of their account information is being compromised. Cryptography is essential to the continued growth of the Internet and electronic commerce."

Source: S. Haunts, Applied Cryptography in .NET and Azure Key Vault,

Chapter 2: A Brief History of Cryptography

Open-source Software

"The printer story"

"'Free software' is a matter of liberty, not price. To understand the concept, you should think of 'free' as in 'free speech,' not as in 'free beer'." —Richard M. Stallman

"It all began in 1980 when Richard Stallman, now the founder of the Free Software Foundation, wanted to fix the malfunctioning laser-printer at the Massachusetts Institute of Technology (MIT) Artificial Intelligence Lab, where he worked as a software programmer. When he asked someone for the "source code" of the program running the printer, he was, to his surprise, refused because the software had been released under a nondisclosure agreement."(You can read the full story here: Transcript of Richard M. Stallman's speech, "Free Software: Freedom and Cooperation")

"Since its early inception it was part of the hacker culture to share software. This was the method that the software community employed to develop, use, and to improve a variety of computer programs. When someone found a problem, a "bug," he/she would fix it, and then make the new version of the program available to the community so that others could further develop it. And this was true not only for those working at universities or other public institutions. Even private hardware manufacturers made the programs that they developed freely available to their own advantage, so that hackers would use them, report bugs, and even make further improvements on the programs."

GNU

"The GNU operating system is a complete free software system, upward-compatible with Unix. GNU stands for “GNU's Not Unix.” It is pronounced as one syllable with a hard g. Richard Stallman made the Initial Announcement of the GNU Project in September 1983. A longer version called the GNU Manifesto was published in March 1985. It has been translated into several other languages.""The project to develop the GNU system is called the “GNU Project.” The GNU Project was conceived in 1983 as a way of bringing back the cooperative spirit that prevailed in the computing community in earlier days—to make cooperation possible once again by removing the obstacles to cooperation imposed by the owners of proprietary software."

"In 1971, when Richard Stallman started his career at MIT, he worked in a group which used free software exclusively. Even computer companies often distributed free software. Programmers were free to cooperate with each other, and often did. By the 1980s, almost all software was proprietary, which means that it had owners who forbid and prevent cooperation by users. This made the GNU Project necessary."

"GNU is a Unix-like operating system. That means it is a collection of many programs: applications, libraries, developer tools, even games."

"The program in a Unix-like system that allocates machine resources and talks to the hardware is called the “kernel”. GNU is typically used with a kernel called Linux. This combination is the GNU/Linux operating system. GNU/Linux is used by millions, though many call it “Linux” by mistake. GNU's own kernel, The Hurd, was started in 1990 (before Linux was started). Volunteers continue developing the Hurd because it is an interesting technical project." (Stallman)

Why Software Should Not Have Owners

Free Software Is Even More Important Now

Source: gnu.org

Linux

The Linux operating system was originally developed by Linus Torvalds in 1991. Over the past 20 years, more than 1300 releases, ranging from version 1.0 to 4.1, were put out [1]. "“Linux” often refers to a group of operating system distributions built around the Linux kernel. In the strictest sense, though, Linux refers only to the presence of the kernel itself. To build out a full operating system, Linux distributions often include tooling and libraries from the GNU project and other sources [2]."

While Richard Stallman was working on the GNU project, "another developer was at work on a free alternative to Unix: Finnish undergraduate Linus Torvalds. After becoming frustrated with licensure for MINIX, Torvalds announced to a MINIX user group on August 25, 1991 that he was developing his own operating system, which resembled MINIX. Though initially developed on MINIX using the GNU C compiler, the Linux kernel quickly became a unique project with a core of developers who released version 1.0 of the kernel with Torvalds in 1994 [2]."

"Torvalds had been using GNU code, including the GNU C Compiler, with his kernel, and it remains true that many Linux distributions draw on GNU components. Stallman has lobbied to expand the term “Linux” to “GNU/Linux,” which he argues would capture both the role of the GNU project in Linux’s development and the underlying ideals that fostered the GNU project and the Linux kernel. Today, “Linux” is often used to indicate both the presence of the Linux kernel and GNU elements. At the same time, embedded systems on many handheld devices and smartphones often use the Linux kernel with few to no GNU components [2]."

Sources: [1] Evolution of Linux Operating System,

[2] A Brief History of Linux

The relationship between GNU and Linux is well explained by Richard Stallman and Linus Torvalds themselves in the documentary History of Gnu, Linux, Free and Open Source Software (Revolution OS).

GitHub

"GitHub is one of the largest social coding platforms on the web. Its collaborative features allow GitHub users to follow each other’s code developments, build off each other’s work, and make it easier than ever to create open source software [1].""Development of the GitHub platform began on 1 October 2007. The site was launched in April 2008 by Tom Preston-Werner, Chris Wanstrath, and PJ Hyett after it had been made available for a few months prior as a beta release [2]."

"What GitHub really did for open-source is it kind of standardized the way that people can contribute to open-source project and interface with them. And so that any developer, anywhere on earth knew how to contribute to a project on GitHub. And that fed this explosion of open-source activity (Nat Friedman, CEO, GitHub, [3])."

Sources: [1] Mining the Social Web - GitHub,

[2] The untold story of Github,

[3] The Rise Of Open-source Software

Centralization and Decentralization

ARPA and Arpanet

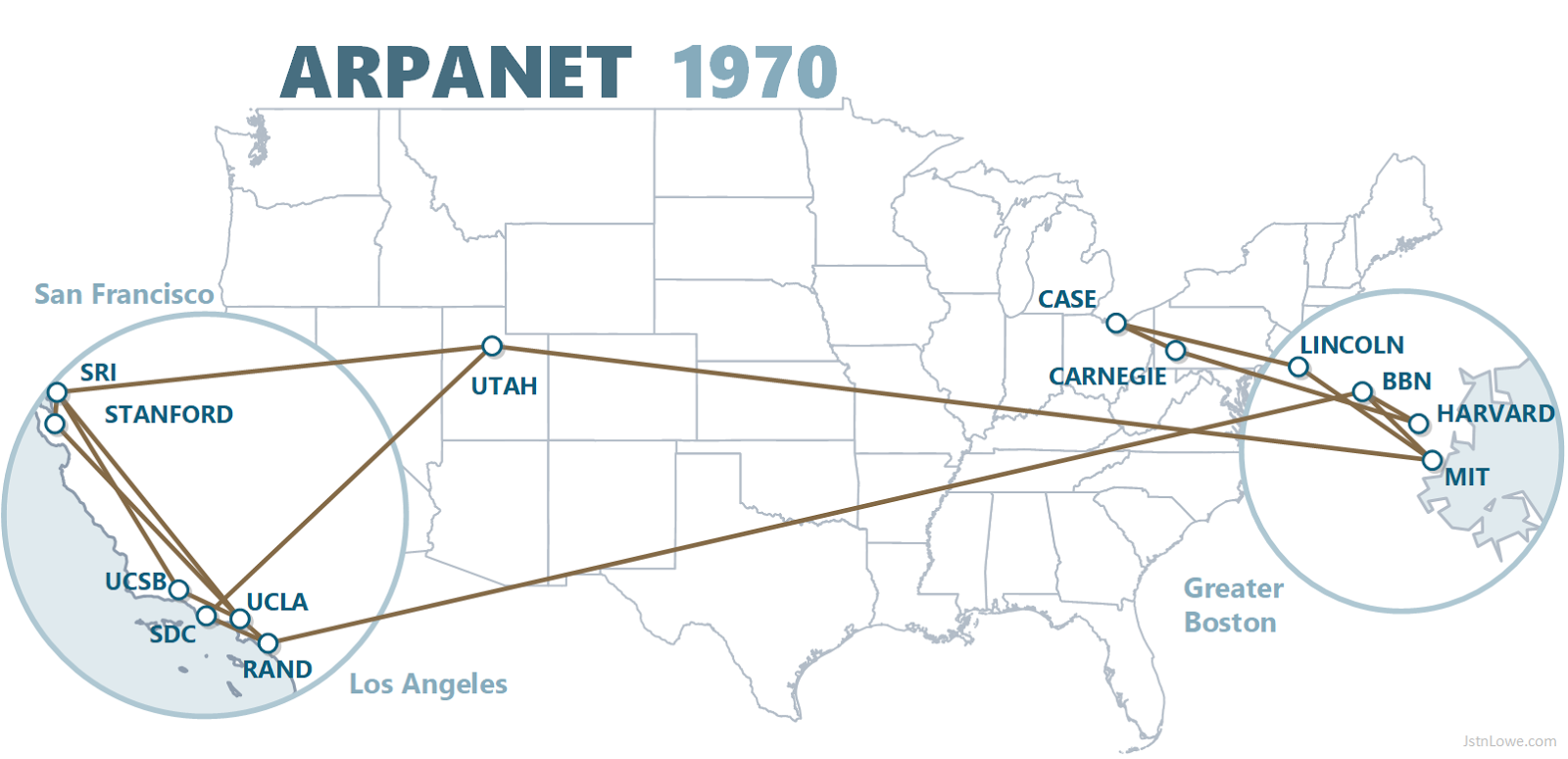

In 1957, the Soviet Union launched Sputnik 1, beating the U.S. in the opening move of what became known as the space race. In response, President Dwight D. Eisenhower created the Advanced Research Projects Agency (ARPA), an agency of the U.S. Department of Defense. Its purpose was to ensure that the United States would avoid further technological surprises. Around that time, computer scientists were envisioning an "intergalactic computer network". In 1969 the idea became a reality when computers at four universities were connected. This network, the ARPANET, became the first Internet [1].

"January 1, 1983 is considered the official birthday of the Internet. Prior to this, the various computer networks did not have a standard way to communicate with each other. A new communications protocol was established called Transfer Control Protocol/Internetwork Protocol (TCP/IP). This allowed different kinds of computers on different networks to "talk" to each other. ARPANET and the Defense Data Network officially changed to the TCP/IP standard on January 1, 1983, hence the birth of the Internet. All networks could now be connected by a universal language [2]."

Sources: [1] ARPANET - The First Internet,

[2] The Brief History of Internet,

Image source

The Early Stages of The Internet

"When the Internet was just starting out in the early 1990’s, it was basically college kids and researchers.A user would connect a device called a modem via a serial port to their computer and use a dial-up service. The connection to the Internet was via a telephone line. To access the Internet, all users needed to do was know the telephone number of the connected computer. From this computer, the user can establish connections to other computers. This computer was called a server and provided basic or specific services for users.

This is known as a client/server architecture. A user can dial-up to their school’s e-mail server to check for messages. Then when a user needed to do research they can disconnect and dial-up to the Gopher server. The organization of the Internet was very chaotic and highly decentralized.

There was no central authority at all and every computer was independent of each other. If one server was not working, users could always dial-up another server."

Source: The Evolution of the Internet, From Decentralized to Centralized

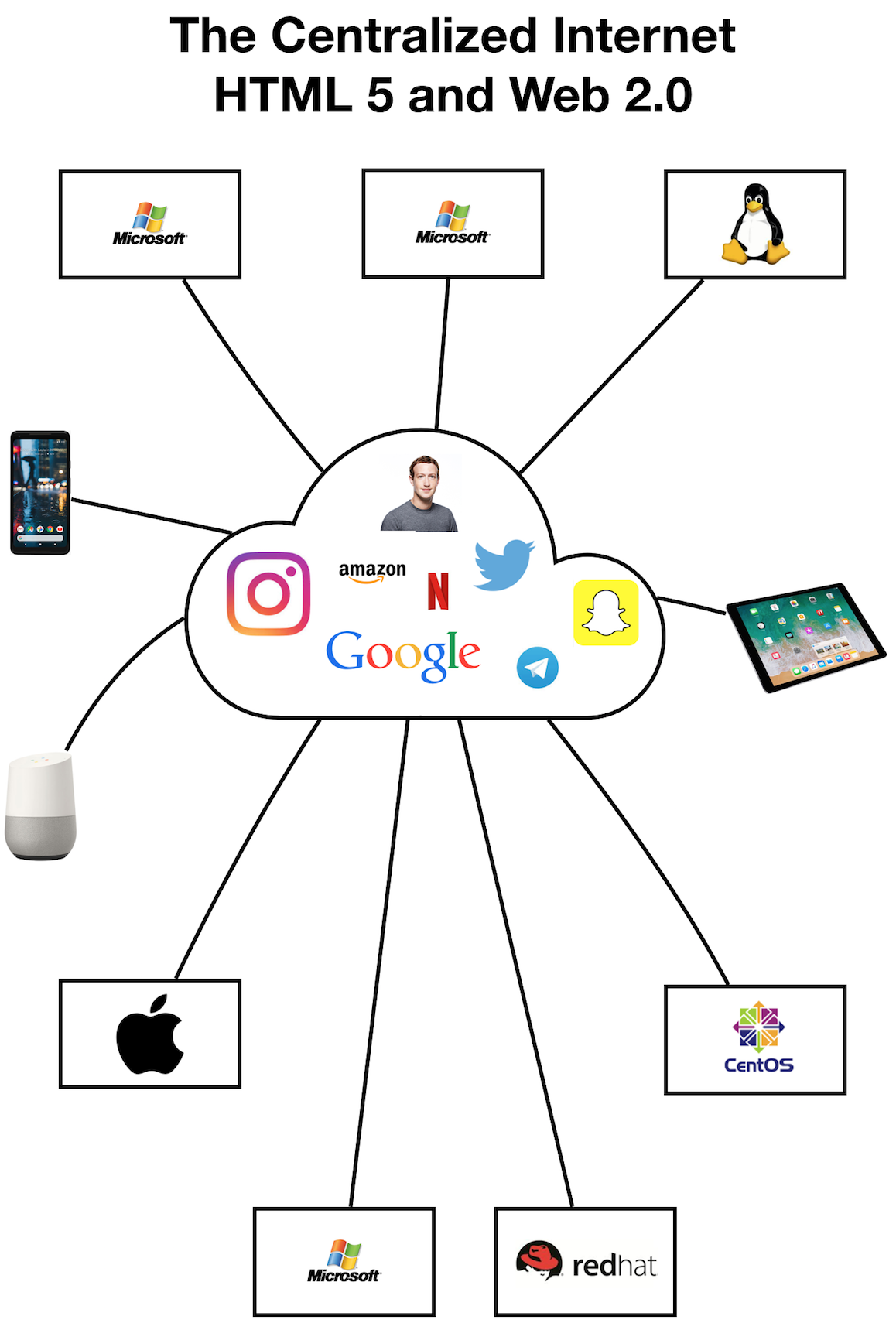

The Centralization of the Internet

"The centralization of the Internet began with it’s commercialization. Companies like AOL began this push as an Internet Service Provider (ISP). Microsoft then bundled Internet Explorer (IE) with the Windows OS starting with Windows 95. By embracing and extending the Internet to Windows users, Microsoft effectively killed off the competition. Netscape closed shop while other browsers like Mozilla were marginalized. Offering IE for free was the starting point for most users since the majority of them had a PC running Windows. Now that they had the software to access the Internet, it would be much easier. All they needed to do next was subscribe to an Internet service.""The problem with this is if the data winds up in the wrong hands. That can be used by malicious actors for more devious purposes. For the most part, the data collected from social media is used more for targeting users by brands. The third party would most likely be ad agencies, marketing firms and research groups. They use analytics on the cold data they get from social media and harvest useful information from them. What they get are insights that is information that provides data about the users of these platforms. The information is then sold, as a product, to brands in order to allow them to target the users for ads and services.

Information is not really free, it has become a commodity that has a price people are willing to pay for. While a centralized platform controls all your data and information you feed it, a decentralized platform would not. There is also more privacy on decentralized platforms as none of your data or activity is being monitored and collected."

Source: The Evolution of the Internet, From Decentralized to Centralized

BitTorrent and Peer-to-peer Protocol

"In 2001 an American computer programmer, named Bram Cohen, began his work on a protocol and a program, which will change the entertainment industry and the interchange of information in Web. The peer-to-peer (P2P) protocol was named BitTorrent, as well as the first file sharing program to use the protocol, also known as BitTorrent [1].""BitTorrent, protocol for sharing large computer files over the Internet. BitTorrent was created in 2001 by Bram Cohen, an American computer programmer who was frustrated by the long download times that he experienced using applications such as FTP.

Files shared with BitTorrent are divided into smaller pieces for distribution among the protocol’s users, called “peers.” A peer who wishes to download a file is directed by a software application called a “client” to access a Web site that hosts a tracker. The tracker keeps records of all the peers who have previously downloaded the file and then allows pieces of their copies of the file to be downloaded by the peer conducting the search. By breaking the file into smaller pieces and allowing peers to download those pieces from each other, BitTorrent uses much less bandwidth than would be the case if all the peers downloaded the complete file from the original source. Once a file is completely downloaded, it becomes a “seed”—that is, a file from which other peers can download pieces. However, BitTorrent can also work without the existence of a seed; a group of peers can share pieces of a file as long as they have among them all the pieces of the original complete file. Some tracker Web sites encourage seeding by penalizing peers who do not seed their files after their downloads are complete.

Many file-sharing Web sites are based on BitTorrent because of its efficient use of bandwidth. The entertainment industry has mounted an active legal campaign against those sites that use BitTorrent to share files of copyrighted material. Success has been limited, however. For example, the operators of the Swedish file-sharing Web site the Pirate Bay were sentenced to prison and fined for copyright infringement in 2010; afterward, however, the Web site still operated and remained extremely popular, receiving about three million visitors per day [2]."
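The piece-sharing idea in the quoted description (a file split into pieces, with peers able to rebuild it as long as they hold every piece among them) can be sketched as follows. This is a simplified model, not the BitTorrent wire protocol: the function names, the tiny piece size, and the dictionary representation of a peer are all hypothetical. The SHA-1 check on each piece is not mentioned in the quoted text; it is included here because the original protocol verifies pieces against SHA-1 hashes.

```python
import hashlib
from typing import Dict, List

PIECE_SIZE = 4  # bytes; unrealistically small, chosen only for illustration


def split_into_pieces(data: bytes, piece_size: int = PIECE_SIZE) -> List[bytes]:
    """Cut the file contents into consecutive fixed-size pieces (the last may be shorter)."""
    return [data[i:i + piece_size] for i in range(0, len(data), piece_size)]


def piece_hashes(pieces: List[bytes]) -> List[bytes]:
    """Compute one SHA-1 digest per piece so downloaded pieces can be verified."""
    return [hashlib.sha1(piece).digest() for piece in pieces]


def assemble(expected: List[bytes], peers: List[Dict[int, bytes]]) -> bytes:
    """Rebuild the file by taking each piece from the first peer holding a valid copy."""
    result = []
    for index, digest in enumerate(expected):
        for held in peers:
            piece = held.get(index)
            if piece is not None and hashlib.sha1(piece).digest() == digest:
                result.append(piece)
                break
        else:
            raise LookupError(f"no peer holds a valid copy of piece {index}")
    return b"".join(result)


original = b"hello bittorrent!"
pieces = split_into_pieces(original)
hashes = piece_hashes(pieces)

# Neither peer has the whole file, but together they hold every piece.
peer_a = {i: p for i, p in enumerate(pieces) if i % 2 == 0}
peer_b = {i: p for i, p in enumerate(pieces) if i % 2 == 1}
rebuilt = assemble(hashes, [peer_a, peer_b])
```

Here `rebuilt` equals `original` even though no single peer acts as a seed, while `assemble` raises `LookupError` if some piece is missing from every peer.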

Sources: [1] BitTorrent: Complete Guide — History, Products, Founding, and More,

[2] BitTorrent by Erik Gregersen

The History of Digital Money (coming next)

DigiCash

DigiCash by David Chaum

HashCash

"Hashcash - A Denial of Service Counter-Measure" by Adam Back

Bit Gold

"Bit Gold" by Nick Szabo

B-money

"B-money" by Wei Dai

Bitcoin

"Bitcoin: A Peer-to-Peer Electronic Cash System" by Satoshi Nakomoto